In modern computing, processes form the bedrock of how our applications run and interact with the operating system. Understanding processes is crucial for any software developer or system architect, because this knowledge shapes fundamental design decisions. This article explores processes from the ground up: what they are, how they communicate, and when to use the different inter-process communication mechanisms.

What is a Process

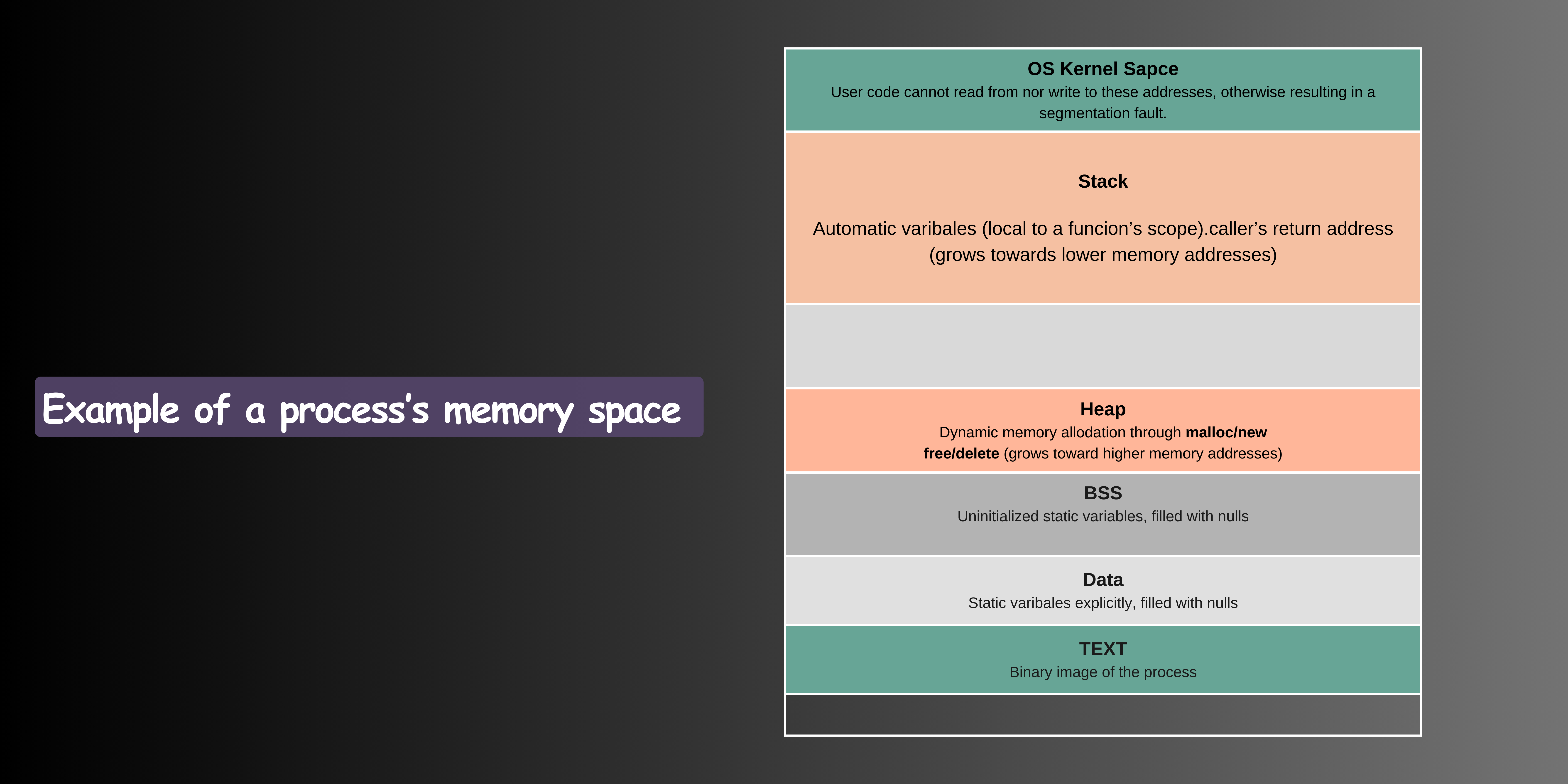

At its core, a process is a program in execution. However, this simple definition belies the complexity of what a process actually represents in a modern operating system. When you launch a program, the operating system creates a process by allocating memory and other resources to run it. The process becomes an isolated environment with its own memory space, containing several key segments:

- Text Segment (Code Segment): This contains the program's machine code instructions. It is typically read-only to prevent a process from accidentally modifying its own instructions.

- Data Segment: This area holds global and static variables. It is further divided into:

  - Initialized data (variables explicitly given a value)

  - Uninitialized data (BSS, "Block Started by Symbol"), which is zero-filled when the program starts

- Heap: A region for dynamic memory allocation. When your program calls `malloc()` or uses `new`, it allocates memory from the heap.

- Stack: Used for function call management, local variables, and program control flow. Each thread within a process has its own stack.

The operating system maintains additional information about each process, typically in a kernel data structure called the process control block (PCB), including:

- Process ID (PID): A unique identifier

- Process state (running, waiting, stopped, etc.)

- CPU registers and program counter

- Memory management information

- I/O status information

- Accounting information
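To make these segments concrete, here is a small sketch, assuming a POSIX system such as Linux, that prints the address of one object from each region along with the process ID. The helper name `show_segments` is hypothetical. The addresses change from run to run because of address space layout randomization, and their relative order is platform-dependent, so treat the output as illustrative only.

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>
#include <unistd.h>

int initialized_global = 42;   // data segment (initialized data)
int uninitialized_global;      // BSS (zero-filled at program start)

// Hypothetical helper: prints one address per memory region and returns the PID.
pid_t show_segments(void) {
    int local = 0;                             // stack
    int *dynamic = malloc(sizeof *dynamic);    // heap
    if (dynamic == NULL) return -1;

    printf("PID:   %ld\n", (long)getpid());
    printf("text:  %p\n", (void *)show_segments);   // a function lives in the text segment
    printf("data:  %p\n", (void *)&initialized_global);
    printf("bss:   %p\n", (void *)&uninitialized_global);
    printf("heap:  %p\n", (void *)dynamic);
    printf("stack: %p\n", (void *)&local);

    free(dynamic);
    return getpid();
}
```

Calling `show_segments()` from `main` twice in separate runs is an easy way to see randomization at work: the PID and most addresses differ each time.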

Process Isolation and Virtual Memory

One of the most important aspects of processes is their isolation from each other. The operating system gives each process its own virtual address space, creating the illusion that the process has the machine's memory to itself. This isolation serves several crucial purposes:

- Security: Processes cannot directly access each other's memory, preventing malicious or buggy programs from interfering with other processes.

- Stability: A crash in one process generally doesn't bring down other processes, enhancing system reliability.

- Resource Management: The operating system can better manage and allocate resources among processes.

This isolation is implemented through virtual memory, where each process's virtual addresses are mapped to physical memory addresses by the Memory Management Unit (MMU). The mapping is maintained in page tables, which the operating system manages.
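A quick way to observe this isolation is `fork()`: the child starts with a copy of the parent's address space (usually implemented lazily with copy-on-write pages), so a variable sits at the same virtual address in both processes, yet a write in the child is invisible to the parent. This is a sketch assuming a POSIX system; `isolation_demo` and `counter` are hypothetical names.

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int counter = 1;

// Hypothetical helper: returns the parent's value of `counter`
// after the child has modified its own copy.
int isolation_demo(void) {
    fflush(stdout);                 // don't duplicate buffered output in the child
    pid_t pid = fork();
    if (pid == -1) {
        perror("fork");
        return -1;
    }
    if (pid == 0) {
        // Child: change its private copy, report it, and exit.
        counter = 100;
        printf("child:  counter=%d at %p\n", counter, (void *)&counter);
        fflush(stdout);             // _exit() does not flush stdio buffers
        _exit(0);
    }
    // Parent: wait so the child's write has definitely happened.
    waitpid(pid, NULL, 0);
    printf("parent: counter=%d at %p\n", counter, (void *)&counter);
    return counter;                 // still 1: the child's write went to its own memory
}
```

Both lines typically print the same address, which shows that identical virtual addresses in two processes can map to different physical memory.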

Inter-Process Communication (IPC)

While process isolation is beneficial for security and stability, processes often need to communicate with each other. This is where Inter-Process Communication (IPC) mechanisms come into play. The operating system provides several methods for processes to communicate safely:

- Message Passing: Message passing allows processes to communicate by exchanging discrete messages through the operating system. One common implementation is the POSIX message queue:

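```c
// POSIX message queue example (Linux; older glibc versions need -lrt)
#include <fcntl.h>    // O_* constants
#include <mqueue.h>
#include <stdio.h>
#include <string.h>

#define MAX_MSG_SIZE 256   // example value

int main(void) {
    const char *message = "hello";
    char buffer[MAX_MSG_SIZE];
    struct mq_attr attr;

    // Set queue attributes
    attr.mq_flags = 0;
    attr.mq_maxmsg = 10;
    attr.mq_msgsize = MAX_MSG_SIZE;
    attr.mq_curmsgs = 0;

    // to get or create a message queue
    mqd_t mq = mq_open("/queue_name", O_RDWR | O_CREAT, 0644, &attr);
    if (mq == (mqd_t)-1) { perror("mq_open"); return 1; }

    // to send a message (including its '\0') to the queue with priority 0
    mq_send(mq, message, strlen(message) + 1, 0);

    // to get a message from the queue; the buffer must be at least mq_msgsize bytes
    if (mq_receive(mq, buffer, MAX_MSG_SIZE, NULL) != -1)
        printf("received: %s\n", buffer);

    // to close the queue
    mq_close(mq);

    // to remove the queue
    mq_unlink("/queue_name");
    return 0;
}
```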

This approach preserves process isolation while enabling communication through a controlled channel. However, POSIX message queues are not available everywhere: they are well supported on Linux, but macOS and Windows implement message passing differently from the example above. In future articles, we'll explore how different operating systems handle message passing, examining their implementations and the rationale behind their design choices.

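- Shared Memory: Shared memory is usually the fastest form of IPC. It lets processes map the same region of memory into their address spaces, so once the region is set up, they exchange data through ordinary reads and writes instead of copying it through the kernel: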

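```c
// Shared memory example (older glibc versions need -lrt)
#include <fcntl.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

int main(void) {
    // Create (or open) a named shared memory object
    int shm_fd = shm_open("/shm_name", O_RDWR | O_CREAT, 0644);
    if (shm_fd == -1) return 1;
    // Configure size of shared memory object
    ftruncate(shm_fd, 4096);
    // Map shared memory object into process address space
    void *ptr = mmap(NULL, 4096, PROT_READ | PROT_WRITE, MAP_SHARED, shm_fd, 0);
    if (ptr == MAP_FAILED) return 1;
    // Writes here are visible to every process that maps "/shm_name"
    strcpy(ptr, "hello");
    // Unmap using the address returned by mmap, not the object's name
    munmap(ptr, 4096);
    // Close the descriptor, then remove the shared memory object
    close(shm_fd);
    shm_unlink("/shm_name");
    return 0;
}
```

While fast, shared memory requires careful synchronization to prevent race conditions; more on this in future articles.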
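To illustrate why synchronization matters, the sketch below shares a counter between a parent and a child process. For brevity it uses an anonymous `MAP_SHARED` mapping, which a child created by `fork()` inherits, rather than a named `shm_open` object, and it synchronizes with C11 atomics instead of a lock. Both are simplifying choices for this example, as are the hypothetical names `shared_increment` and `ITERATIONS`; it also assumes `atomic_long` is lock-free, as it is on mainstream platforms. Replacing `atomic_fetch_add` with a plain `++` on an ordinary `long` would typically lose updates.

```c
#define _DEFAULT_SOURCE          // expose MAP_ANONYMOUS on glibc
#include <stdatomic.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

#define ITERATIONS 100000

// Hypothetical helper: parent and child each add ITERATIONS to one shared counter.
long shared_increment(void) {
    // A MAP_SHARED mapping stays shared with children created by fork().
    atomic_long *counter = mmap(NULL, sizeof *counter, PROT_READ | PROT_WRITE,
                                MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (counter == MAP_FAILED) return -1;
    atomic_init(counter, 0);

    pid_t pid = fork();
    if (pid == -1) {
        munmap(counter, sizeof *counter);
        return -1;
    }

    // Both processes run this loop; the atomic add prevents lost updates.
    for (int i = 0; i < ITERATIONS; i++)
        atomic_fetch_add(counter, 1);

    if (pid == 0) _exit(0);      // child is done
    waitpid(pid, NULL, 0);       // parent waits for the child's increments

    long result = atomic_load(counter);
    munmap(counter, sizeof *counter);
    return result;               // 2 * ITERATIONS when no updates are lost
}
```

For larger shared structures, a process-shared mutex or semaphore placed in the same mapping is the more common choice; atomics are enough here because the shared state is a single integer.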

- Pipes and Named Pipes (FIFOs): Pipes and FIFOs (also known as named pipes) provide a unidirectional interprocess communication channel. A pipe has a read end and a write end, and data written to the write end can be read from the read end.

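```c
#include <stdio.h>
#include <string.h>
#include <unistd.h>

int main(void) {
    // This holds file descriptors for the pipe:
    // pipefd[1] for writing, pipefd[0] for reading
    int pipefd[2];
    char buffer[64];
    const char *message = "hello";
    // to create a pipe
    if (pipe(pipefd) == -1) return 1;
    // to write to the pipe (write first: reading an empty pipe blocks)
    write(pipefd[1], message, strlen(message) + 1);
    // to read from the pipe
    if (read(pipefd[0], buffer, sizeof(buffer)) > 0) printf("%s\n", buffer);
    // to close both ends of the pipe
    close(pipefd[0]);
    close(pipefd[1]);
    return 0;
}
```

Anonymous pipes are typically shared between related processes, such as a parent and a child created with `fork()`, which inherits the pipe's file descriptors. Named pipes (FIFOs) extend this concept to unrelated processes: a FIFO has a name in the file system (created with `mkfifo`), so any process with permission can open it.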
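In practice, a pipe usually connects a parent and the child it forks: each process closes the end it does not use, the parent writes, and the child reads until end-of-file. This is a sketch assuming a POSIX system; `pipe_to_child` is a hypothetical name, and to make the result easy to check, the child reports how many bytes it read through its exit status (which only carries 8 bits).

```c
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical helper: sends `message` to a child through a pipe and returns
// the number of bytes the child reports reading (modulo 256), or -1 on error.
int pipe_to_child(const char *message) {
    int pipefd[2];                      // pipefd[0]: read end, pipefd[1]: write end
    if (pipe(pipefd) == -1) return -1;

    fflush(stdout);                     // avoid duplicating buffered output in the child
    pid_t pid = fork();
    if (pid == -1) {
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }

    if (pid == 0) {
        // Child: it only reads, so close the write end.
        close(pipefd[1]);
        char buffer[128];
        ssize_t n, total = 0;
        // read() returns 0 (EOF) once every write end is closed.
        while ((n = read(pipefd[0], buffer, sizeof buffer)) > 0) {
            fwrite(buffer, 1, (size_t)n, stdout);
            total += n;
        }
        fflush(stdout);
        close(pipefd[0]);
        _exit((int)(total & 0xff));
    }

    // Parent: it only writes, so close the read end.
    close(pipefd[0]);
    write(pipefd[1], message, strlen(message));
    close(pipefd[1]);                   // signals EOF to the child

    int status;
    if (waitpid(pid, &status, 0) == -1 || !WIFEXITED(status)) return -1;
    return WEXITSTATUS(status);
}
```

Closing the unused ends matters: if the child kept its own copy of the write end open, its `read()` would never see end-of-file.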

In future articles, we will dive deeper into each of these communication methods, so stay tuned :)

When to Use IPC: Real-World Considerations

The decision to use IPC should be carefully considered based on several factors:

Appropriate Use Cases:

- Service-Oriented Architectures: When different components need different privileges or isolation levels. Example: A web server with separate processes for handling network connections and executing user code.

- Fault Isolation: When system stability requires containing failures. Example: Web browsers running each tab in a separate process.

- Resource Sharing: When multiple processes need controlled access to limited resources. Example: A print spooler managing multiple print jobs from different applications.

When to Avoid IPC:

- Simple Applications: When the overhead of IPC outweighs its benefits. Example: A basic text editor doesn't need process separation between UI and file handling.

- High-Performance Requirements: When frequent, low-latency communication is needed. Example: A real-time video processing application might prefer threads, which share a single address space, over separate processes.

- Development Complexity Constraints: When the additional complexity of IPC would make the system harder to maintain.

Best Practices and Design Considerations

When implementing IPC, consider these guidelines:

- Choose the Right IPC Mechanism

  - Use message passing for structured communication between independent components

  - Use shared memory for high-performance data sharing

  - Use pipes for simple, unidirectional data flow

- Handle Errors Appropriately

  - Plan for process crashes and network failures

  - Implement proper cleanup of IPC resources

  - Use timeouts to prevent deadlocks

- Consider Security Implications

  - Validate all IPC inputs

  - Use appropriate permissions for IPC resources

  - Apply the principle of least privilege
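As one illustration of the timeout advice, `poll()` can bound how long a process waits on an IPC descriptor, such as the read end of a pipe, instead of blocking forever in `read()`. This is a sketch assuming a POSIX system; `wait_readable` and the 100 ms value used below are hypothetical choices. POSIX message queues offer a similar facility through `mq_timedreceive()`.

```c
#include <errno.h>
#include <poll.h>
#include <unistd.h>

// Hypothetical helper: waits up to timeout_ms for fd to become readable.
// Returns 1 if data (or end-of-file) is ready, 0 on timeout, -1 on error.
int wait_readable(int fd, int timeout_ms) {
    struct pollfd pfd = { .fd = fd, .events = POLLIN };
    int rc;
    // Retry if a signal interrupts the wait (simplification: the full timeout restarts).
    do {
        rc = poll(&pfd, 1, timeout_ms);
    } while (rc == -1 && errno == EINTR);
    if (rc <= 0) return rc;             // 0: timed out, -1: error
    // POLLHUP means the writer closed its end; a read() will then return EOF.
    return (pfd.revents & (POLLIN | POLLHUP)) ? 1 : -1;
}
```

With an empty pipe, `wait_readable(pipefd[0], 100)` returns 0 after roughly 100 ms; once something is written to `pipefd[1]`, it returns 1 and the following `read()` will not block.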

Conclusion

Processes and IPC are fundamental concepts in modern operating systems, providing a balance between isolation and communication. While IPC adds complexity to system design, it offers important benefits in terms of security, stability, and modularity when used appropriately. Understanding these concepts and their trade-offs is crucial for making informed architectural decisions in software development.

The key is to use IPC judiciously, choosing it when the benefits of process isolation outweigh the cost of implementation complexity and performance overhead. As with many aspects of software engineering, success lies not in knowing all the available tools, but in knowing when and how to use them effectively.